Written by Dave Shackleford

June 2020

Introduction and Background

Security professionals are starting to rethink the way they approach access control and the security models of interconnected systems and user access.

First, they’ve started looking at the entire environment as potentially untrusted or compromised, rather than thinking only in terms of “outside-in” attack vectors. Increasingly, the most damaging attack scenarios are almost entirely internal, owing to advanced malware and phishing campaigns that compromise end users. They’ve also realized they need to better understand application behavior at the endpoint; security and operations teams need to look much more closely at what types of communication approved applications should really be transmitting in the first place. Similarly, organizations need a renewed focus on trust relationships, and on user-to-system and system-to-system relationships in general, within all parts of their environment. Most of the communications seen in enterprise networks today are either wholly unnecessary or not relevant to the systems or applications needed for business. Creating a more restricted model around these communications may significantly improve both security and IT operations.

These are all worthwhile goals, but many traditional controls are not capable of accomplishing them. Compounding this problem is the advent of highly virtualized and converged workloads, as well as public cloud workloads that are highly dynamic in nature. Hybrid cloud applications often will send traffic between on-premises and external cloud service environments, or between various segments within a cloud service provider environment.

The nature of workloads is changing, too. For instance, it’s rare that a workload will be started in Amazon Web Services (AWS) or Azure and operate statically without being modified or accessed by another cloud service or even a different cloud platform.

Although there are many tools and controls available to help monitor internal workloads and data moving between hybrid cloud environments, enterprises need to adopt one overarching theme when designing a dynamic security architecture model—one of zero trust.

Zero trust is a model in which all assets in an IT operating environment are considered untrusted by default until their network traffic and behavior are validated and approved. The concept began with segmenting and securing the network across locations and hosting models. Today, however, there’s more integration into individual servers and workloads to inspect application components, binaries and the behavior of systems communicating within an application architecture. The zero trust approach does not involve eliminating the perimeter; instead, it leverages network micro-segmentation and identity controls to move the perimeter in as close as possible to privileged apps and protected surface areas.

One of the more common questions many security and operations teams face is: Is zero trust practical? Although the answer is somewhat subjective, depending on the organization, goals, scope of application and timeframe, the technology exists today to successfully implement a zero trust access control model. Zero trust projects do require significant investments in time and skills, however.


Elements of Zero Trust

To implement a zero trust architecture model, security and operations teams will need to focus on two key concepts. First, security will almost always need to be integrated into workloads and move with the instances and data as they migrate between internal and public cloud environments. By creating a layer of policy enforcement that travels with workloads wherever they go, organizations have a much stronger chance of protecting data regardless of where the instance runs. In some ways, this focus shifts security policy and access control back to the individual instances rather than the network itself, but modern architecture designs don’t easily accommodate traditional network segmentation models.
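One way to make policy travel with a workload is to write rules in terms of workload labels rather than IP addresses, so a rule still applies after an instance migrates and receives a new address. The sketch below assumes a hypothetical `Rule`/`Workload` model and label names (`web`, `db`); it is illustrative, not any product’s policy format.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    src_label: str
    dst_label: str
    port: int


@dataclass
class Workload:
    name: str
    label: str
    ip: str  # changes when the instance moves; rules never reference it


# Hypothetical label-based policy: web tier may reach the database on 5432.
RULES = {Rule("web", "db", 5432)}


def allowed(src: Workload, dst: Workload, port: int) -> bool:
    """Default deny: permit only flows that match a label-based rule."""
    return Rule(src.label, dst.label, port) in RULES


web = Workload("web-1", "web", "10.0.1.15")  # on-premises address
db = Workload("db-1", "db", "10.0.2.20")
print(allowed(web, db, 5432))  # True

# Migrate web-1 to a cloud subnet: the IP changes, the decision does not.
web.ip = "172.31.8.40"
print(allowed(web, db, 5432))  # True
print(allowed(db, web, 22))    # False
```

Because `allowed()` never consults `ip`, moving `web-1` between environments leaves the decision unchanged; only relabeling the workload or editing `RULES` alters it.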

Second, the actual behavior of the applications and services running on each system will need to be much better understood, and the relationships between systems and applications will need more intense scrutiny than ever, to facilitate a highly restricted, zero trust operations model without adversely affecting application and service connectivity. Dynamic assets like virtual instances (running on technology such as VMware internally or in AWS or Azure) and containers are difficult to position behind “fixed” network enforcement points, so organizations can adopt a zero trust micro-segmentation strategy that allows traffic to flow only between approved systems and connections, regardless of the environment they are in.

In addition to these overarching themes, three distinct technology elements make up a robust zero trust strategy. As shown in Figure 1, these elements are user identities, device identities, and network access controls.

User Identities

The first foundational element of a zero trust model is identity and access management (IAM), specifically focused on users and role-based access to and integration with applications and services. Most application and service interactions have some tie-in to roles and privileges (users, groups, service accounts and the like), so ensuring that any zero trust technology can interface with identity stores and policies in real time and enforce policy decisions on allowed and disallowed actions makes sense. IAM is a huge area to cover, encompassing everything from user directories to access controls, authentication and authorization. To facilitate a trusted communications model, which is at the heart of a zero trust design, organizations should consider IAM a primary area of focus and resource commitment.
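As a minimal sketch of per-request, role-based authorization, the following maps hypothetical roles to permitted (resource, action) pairs and evaluates every request against the user’s current roles. The dictionaries stand in for an identity store such as a directory service; all names are assumptions.

```python
# Hypothetical role-to-permission mapping (would come from the identity store).
ROLE_PERMISSIONS = {
    "dba": {("orders-db", "read"), ("orders-db", "write")},
    "analyst": {("orders-db", "read")},
}

# Hypothetical user-to-role assignments, as a directory service might report.
USER_ROLES = {
    "alice": {"dba"},
    "bob": {"analyst"},
}


def is_permitted(user: str, resource: str, action: str) -> bool:
    """Evaluate each request against current roles; unknown users get nothing."""
    roles = USER_ROLES.get(user, set())
    return any((resource, action) in ROLE_PERMISSIONS.get(r, set()) for r in roles)


print(is_permitted("bob", "orders-db", "read"))      # True
print(is_permitted("bob", "orders-db", "write"))     # False
print(is_permitted("mallory", "orders-db", "read"))  # False

# Revoke alice's role; the very next decision reflects the change.
print(is_permitted("alice", "orders-db", "write"))   # True
USER_ROLES["alice"].discard("dba")
print(is_permitted("alice", "orders-db", "write"))   # False
```

Because the check runs on every request rather than once at login, a role change in the identity store takes effect on the next request.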

Device Identities

The second identity-related aspect of zero trust focuses on device identity. This, in many ways, is much broader and more complex than user identities (which often are tied to directory services, such as Active Directory, and may be streamlined with federation and single sign-on tools and services). Device identities need to encompass a broad array of different device types ranging from end user equipment like mobile devices and laptops to static servers in a data center and virtual machines and containers running in the cloud.


Network Access

The third major component of a traditional zero trust design model is network segmentation that is closely aligned with a specific type of system or workload (often termed micro-segmentation). Each type of system and user/privilege association will also have a defined set of network ports and protocols associated with application use and system–system and user–system interaction. In addition to finite indicators of network services and protocols, a sound zero trust approach also should be capable of accommodating network behavior patterns. For example, a user session coming from a trusted device should be allowed to access certain network services and platforms, but this session should not stay open for longer than a normal workday. Another example might be two servers or containers communicating as part of an application workflow (which is allowed), but this should not happen more than several times per minute at most. (The number of connections or established communication sessions has a “normal” range within a defined time period.)
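The two behavioral examples above can be expressed as simple checks layered on a port/protocol allowlist. In this sketch the limits (an 8-hour session, at most five connections per host pair in any 60-second window) and the flow tuple format are assumptions chosen for illustration; real thresholds would come from observed baselines.

```python
from collections import deque

# Assumed limits; the text gives only qualitative examples.
MAX_SESSION_SECONDS = 8 * 60 * 60   # a "normal workday"
MAX_CONN_PER_MINUTE = 5             # "several times per minute at most"

# Approved (source type, destination type, protocol, port) combinations.
ALLOWED_FLOWS = {("app", "db", "tcp", 5432)}


class FlowMonitor:
    """Tracks recent connection attempts per host pair over a 60-second window."""

    def __init__(self) -> None:
        self.recent: dict[tuple[str, str], deque] = {}

    def connection_allowed(self, src_host: str, dst_host: str,
                           flow: tuple[str, str, str, int], now: float) -> bool:
        if flow not in ALLOWED_FLOWS:
            return False                      # port/protocol not approved
        window = self.recent.setdefault((src_host, dst_host), deque())
        while window and now - window[0] >= 60:
            window.popleft()                  # forget attempts older than 60 s
        if len(window) >= MAX_CONN_PER_MINUTE:
            return False                      # outside the "normal" range
        window.append(now)
        return True


def session_expired(started_at: float, now: float) -> bool:
    """True once a user session has outlived the assumed workday limit."""
    return now - started_at > MAX_SESSION_SECONDS


mon = FlowMonitor()
flow = ("app", "db", "tcp", 5432)
print([mon.connection_allowed("app-1", "db-1", flow, float(t)) for t in range(6)])
# [True, True, True, True, True, False]
print(mon.connection_allowed("app-1", "db-1", flow, 70.0))  # True: window rolled over
print(session_expired(0.0, 9 * 3600.0))                     # True
```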

Zero Trust, Then and Now

When the concept of zero trust began to gain traction in the information security community, the primary focus was on network micro-segmentation seeking to prevent attackers from using unapproved network connections to attack systems, move laterally from a compromised application or system, or perform any illicit network activity regardless of environment. Now, with the aggregation of network communications, user identity and device identity, zero trust facilitates the creation of affinity policies, in which systems have relationships and permitted applications and traffic, and any attempted communications are evaluated and compared against these policies to determine whether the actions should be permitted. This happens continuously, and many types of zero trust control technology also include some sort of machine learning capabilities to perform analytics processing of attempted behaviors, adapting dynamically over time to changes in the workloads and application environments.
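An affinity policy evaluates user identity, device identity and the requested network flow together. The sketch below assumes a hypothetical inventory of verified devices and a set of (role, destination workload, port) relationships; it returns a reason along with the decision so that denials can feed later analytics.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Request:
    user_role: str      # from the identity store
    device_id: str      # from device identity (certificate, agent ID, etc.)
    dst_workload: str   # network destination being requested
    port: int


# Hypothetical inventory of devices whose identity has been verified.
TRUSTED_DEVICES = {"laptop-0042"}

# Hypothetical affinity relationships: (role, destination workload, port).
AFFINITY = {("finance", "payroll-app", 443)}


def evaluate(req: Request) -> tuple[bool, str]:
    """Default deny: every element of the request must match policy."""
    if req.device_id not in TRUSTED_DEVICES:
        return False, "untrusted device"
    if (req.user_role, req.dst_workload, req.port) not in AFFINITY:
        return False, "no affinity rule"
    return True, "allowed"


print(evaluate(Request("finance", "laptop-0042", "payroll-app", 443)))
# (True, 'allowed')
print(evaluate(Request("finance", "laptop-9999", "payroll-app", 443)))
# (False, 'untrusted device')
print(evaluate(Request("engineering", "laptop-0042", "payroll-app", 443)))
# (False, 'no affinity rule')
```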

By potentially eliminating lateral movement, a zero trust microsegmentation model also reduces the post-compromise risk when an attacker has illicitly gained access to an asset within a data center or cloud environment. Security architecture and operations teams (and often DevOps and cloud engineering teams) refer to this as limiting the “blast radius” of an attack, because any damage is contained to the smallest possible surface area, and attackers are prevented from leveraging one compromised asset to access another. This limitation works not only by controlling asset-to-asset communication, but also by evaluating the actual applications running and assessing what these applications are trying to do. For example, if an application workload (web services such as NGINX or Apache) is legitimately permitted to communicate with a database server, the attacker would have to compromise the system and then perfectly emulate the web services in trying to laterally move to the database server (even issuing traffic directly from the local binaries and services installed).
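To illustrate the process-aware part of this example, the sketch below ties a permitted connection to the path and SHA-256 digest of the binary that opens it, so an attacker’s tool, or a tampered copy at the legitimate path, fails the check even from an approved host. The rule format is hypothetical, and in-memory bytes stand in for the executable file an endpoint agent would actually hash.

```python
import hashlib
from dataclasses import dataclass


@dataclass(frozen=True)
class ProcessRule:
    dst_workload: str
    port: int
    exe_path: str      # binary expected to originate the connection
    exe_sha256: str    # digest recorded for the approved build


def digest(data: bytes) -> str:
    return hashlib.sha256(data).hexdigest()


# Stand-in contents; an agent would read and hash the executable on disk.
approved_nginx = b"approved nginx build"
RULES = [ProcessRule("orders-db", 5432, "/usr/sbin/nginx", digest(approved_nginx))]


def process_allowed(exe_path: str, exe_bytes: bytes, dst: str, port: int) -> bool:
    """Permit only if destination, port, binary path and binary hash all match."""
    h = digest(exe_bytes)
    return any(
        r.dst_workload == dst and r.port == port
        and r.exe_path == exe_path and r.exe_sha256 == h
        for r in RULES
    )


print(process_allowed("/usr/sbin/nginx", approved_nginx, "orders-db", 5432))    # True
print(process_allowed("/tmp/.x/scanner", b"attacker tool", "orders-db", 5432))  # False
print(process_allowed("/usr/sbin/nginx", b"trojanized", "orders-db", 5432))     # False
```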

Common Challenges in Zero Trust

Implementing a zero trust access control model isn’t necessarily simple. Organizations have encountered numerous challenges along the way in their zero trust journeys. Some of these are presented in Figure 2 and detailed as follows:

Technology silos

Most organizations have a wide variety of technology in place, likely including legacy operating systems and applications, development tools and platforms, third-party applications and services, “homegrown” applications and much more. Commonly, some of these technologies don’t interoperate well, leading to silos.

Examples might include a single primary network vendor whose equipment doesn’t interoperate with other network tools, hardware limitations, or a specific OS version that can’t support updates to critical applications without performance issues. Zero trust architecture and technology usually require broad access to infrastructure and platforms, and a large number of technology silos could diminish their effectiveness.

Lack of technology integration

For zero trust technology to provide the most benefit, some degree of technology integration will be necessary; for example, endpoint agents will need to be installed on systems and mobile devices, and any issues with this integration can easily derail a zero trust implementation.

Similarly, the policy engine for zero trust should be able to integrate with user directory stores in use to continually assess accounts, roles and permissions.

One of the more pressing technology integration hurdles can emerge when third-party solutions and platforms aren’t available or won’t run on specific cloud provider infrastructure. As more organizations move to a hybrid or public cloud deployment model, this incompatibility can prove to be a crippling issue for many.

Rapidly changing threat surface and threat landscape

The threat landscape is constantly changing, of course, which can create challenges for zero trust technologies that focus on only one type of environment or are limited in deployment modality. For example, many attackers now target end users as the primary ingress vector to a network and can functionally “assume” that user’s identity and device identity. A zero trust technology that doesn’t incorporate strong machine learning with behavioral modeling and dynamic updates will likely miss some of the most critical types of attacks seen today. These include:

• Lateral movement between systems: This is a common scenario in today’s attack campaigns, in which initial ingress into a network environment is usually followed by probes and attempts to compromise additional nearby systems. Detecting and preventing lateral movement requires an understanding of trust relationships within the environment, as well as real-time monitoring and response capabilities.

• Insider threats: These are notoriously difficult to detect because insiders usually already have access, which can limit the efficacy of identity- or endpoint-specific controls in a zero trust design. Only a deep understanding of which behaviors are expected or unexpected in a user’s interactions with data and applications can help detect insider threats.
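A simple way to surface the lateral movement pattern described above is to compare observed flows against the baseline of known trust relationships and flag hosts that reach several unexpected peers. The baseline, host names and the threshold of three distinct peers are assumptions for illustration; production tools use richer behavioral models.

```python
from collections import defaultdict

# Hypothetical baseline of trust relationships learned during discovery.
BASELINE = {("web-1", "app-1"), ("app-1", "db-1")}
FANOUT_ALERT = 3  # assumed number of distinct unexpected peers that triggers an alert


def find_lateral_movement(flows: list[tuple[str, str]]) -> dict[str, set[str]]:
    """Return hosts that contacted at least FANOUT_ALERT peers outside the baseline."""
    unexpected: dict[str, set[str]] = defaultdict(set)
    for src, dst in flows:
        if (src, dst) not in BASELINE:
            unexpected[src].add(dst)
    return {src: peers for src, peers in unexpected.items() if len(peers) >= FANOUT_ALERT}


observed = [
    ("web-1", "app-1"), ("app-1", "db-1"),                     # expected
    ("web-1", "db-1"), ("web-1", "hr-1"), ("web-1", "dc-1"),   # probing from web-1
    ("app-1", "backup-1"),                                     # one-off, below threshold
]
alerts = find_lateral_movement(observed)
print({host: sorted(peers) for host, peers in alerts.items()})
# {'web-1': ['db-1', 'dc-1', 'hr-1']}
```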

Lack of simplicity and ease of use

The more complicated a security technology is, the less likely it will be rolled out effectively or consistently applied. One of the bigger challenges with zero trust and micro-segmentation tools today is the complexity and lack of simple administration and policy design/implementation. An intuitive console and policy engine, well-designed reporting and metrics, easy installation and monitoring for endpoint and workload agents, and strong support for numerous technologies and platforms (especially cloud environments) are critical for any enterprise-class technology that seeks to help organizations implement zero trust.

The New Concept of Zero Trust

As organizations look to implement zero trust technologies, it’s critical to keep in mind that no single technology platform or service can wholly deliver zero trust. To achieve a zero trust access control posture, organizations need to think through the following aspects of how their environment operates.

Defining Trusted Users and Trusted Devices

To get started, a discovery effort is critical. Most micro-segmentation and zero trust technologies include some form of scanning and discovery tools to find identity use and privilege allocation, application components in use, traffic sent between systems, device types, and behavioral trends and patterns in the environment.

Security teams should work with IAM teams or those responsible for key IT operations roles and functions such as user provisioning and management to understand the different groups and users within the environment, as well as the types of access they need to perform job functions. The same should be done for all types of user devices, primarily laptops and desktops in use by privileged users.


Integration of Identity (User/Device) and Network

After some basic discovery has been conducted, any mature access control (micro-segmentation) policy engine should be able to start linking detected and stated identities (users, groups, devices and privilege sets) with network traffic generated by specific services and application components across systems. To get the most benefit from a zero trust strategy, this stage of planning and project implementation needs to carefully accommodate business- and application-centric use cases. Security teams should plan to evaluate which types of behavior are actually necessary in the environment, versus those that are simply allowed or not explicitly denied. This activity takes time and careful analysis of running systems.
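Linking discovered identities with traffic can be as simple as translating IP-level flow records into identity-level relationships and counting how often each occurs. The flow records and identity map below are hypothetical; the output is a list of candidate relationships to review, not an approved policy.

```python
from collections import Counter

# Hypothetical discovery output: (source IP, destination IP, destination port).
flows = [
    ("10.0.1.15", "10.0.2.20", 5432),
    ("10.0.1.15", "10.0.2.20", 5432),
    ("10.0.3.7", "10.0.2.20", 22),
]

# Hypothetical identity map assembled from directory, inventory or agent data.
identity = {
    "10.0.1.15": "svc-web (web tier)",
    "10.0.2.20": "orders-db",
    "10.0.3.7": "jdoe (laptop)",
}


def label_flows(flows: list[tuple[str, str, int]],
                identity: dict[str, str]) -> Counter:
    """Translate IP-level flows into identity-level relationships with counts."""
    return Counter(
        (identity.get(src, "unknown"), identity.get(dst, "unknown"), port)
        for src, dst, port in flows
    )


for (src, dst, port), n in label_flows(flows, identity).most_common():
    print(f"{src} -> {dst}:{port} seen {n}x")
# svc-web (web tier) -> orders-db:5432 seen 2x
# jdoe (laptop) -> orders-db:22 seen 1x
```

Frequency alone doesn’t make a relationship necessary: the `jdoe (laptop)` SSH connection to `orders-db` is exactly the kind of allowed-but-not-needed traffic this review should question.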

Where Remote Access Fits

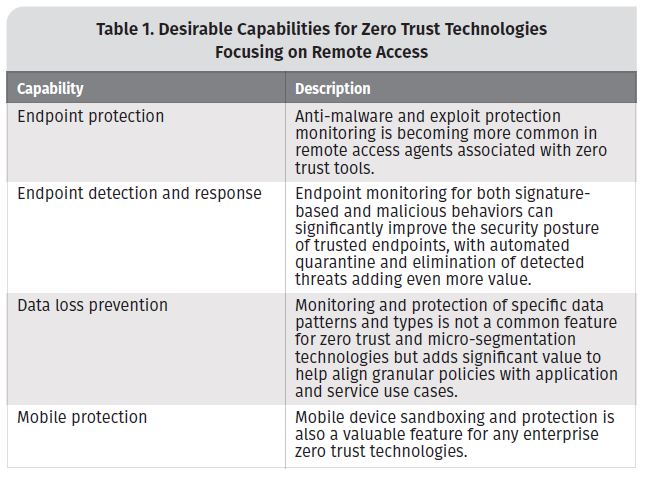

Another critical element of zero trust planning and implementation is accommodating the increasingly common remote access and remote work arrangements for a wide variety of employees. Zero trust technologies that also focus on remote access may include some of the capabilities shown in Table 1.

Organizations should keep the following general best practices in mind for implementing zero trust tools and controls:


Start with passive application discovery, usually implemented with network traffic monitoring. Allow for several weeks of discovery to find the relationships in place and coordinate with stakeholders who are knowledgeable about what “normal” traffic patterns and intersystem communications look like. Enforcement policies should be enacted later, after confirming the appropriate relationships that should be in place, along with application behavior.
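The discovery-first practice above is often implemented as a policy mode switch: the same rules are evaluated in both phases, but in monitor mode a non-matching flow is only logged as a would-be denial. The `Mode` enum, flow tuples and log format below are hypothetical.

```python
from enum import Enum


class Mode(Enum):
    MONITOR = "monitor"   # discovery phase: log, never block
    ENFORCE = "enforce"   # after stakeholders confirm relationships


# Hypothetical approved (source, destination, port) relationships.
APPROVED = {("web", "app", 8443), ("app", "db", 5432)}


def handle(flow: tuple[str, str, int], mode: Mode, log: list[str]) -> bool:
    """Return True if the flow is let through under the given mode."""
    if flow in APPROVED:
        return True
    if mode is Mode.MONITOR:
        log.append(f"would deny {flow}")   # record, but let traffic pass
        return True
    log.append(f"denied {flow}")
    return False


log: list[str] = []
print(handle(("web", "db", 5432), Mode.MONITOR, log))  # True
print(handle(("web", "db", 5432), Mode.ENFORCE, log))  # False
print(log)
```

Reviewing the monitor-mode log with stakeholders before switching to `Mode.ENFORCE` is what keeps enforcement from breaking legitimate application traffic.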


A zero trust architecture should include authentication and authorization controls, network access and inspection controls, and monitoring/enforcement controls for both the network and endpoints. Remember, no single technology currently provides a full zero trust design and implementation; a combination of tools and services is necessary to provide the full degree of coverage needed. For most organizations, zero trust and existing infrastructure will need to coexist for some period of time, with emphasis on the common components and control categories that can suitably enable both, such as identity and access management through directory service integration, endpoint security and policy enforcement, and network monitoring and traffic inspection. As zero trust frameworks mature and evolve, so will standards and platform interoperability, likely facilitating more streamlined and effective approaches overall.

Building the Business Case for Least Privilege

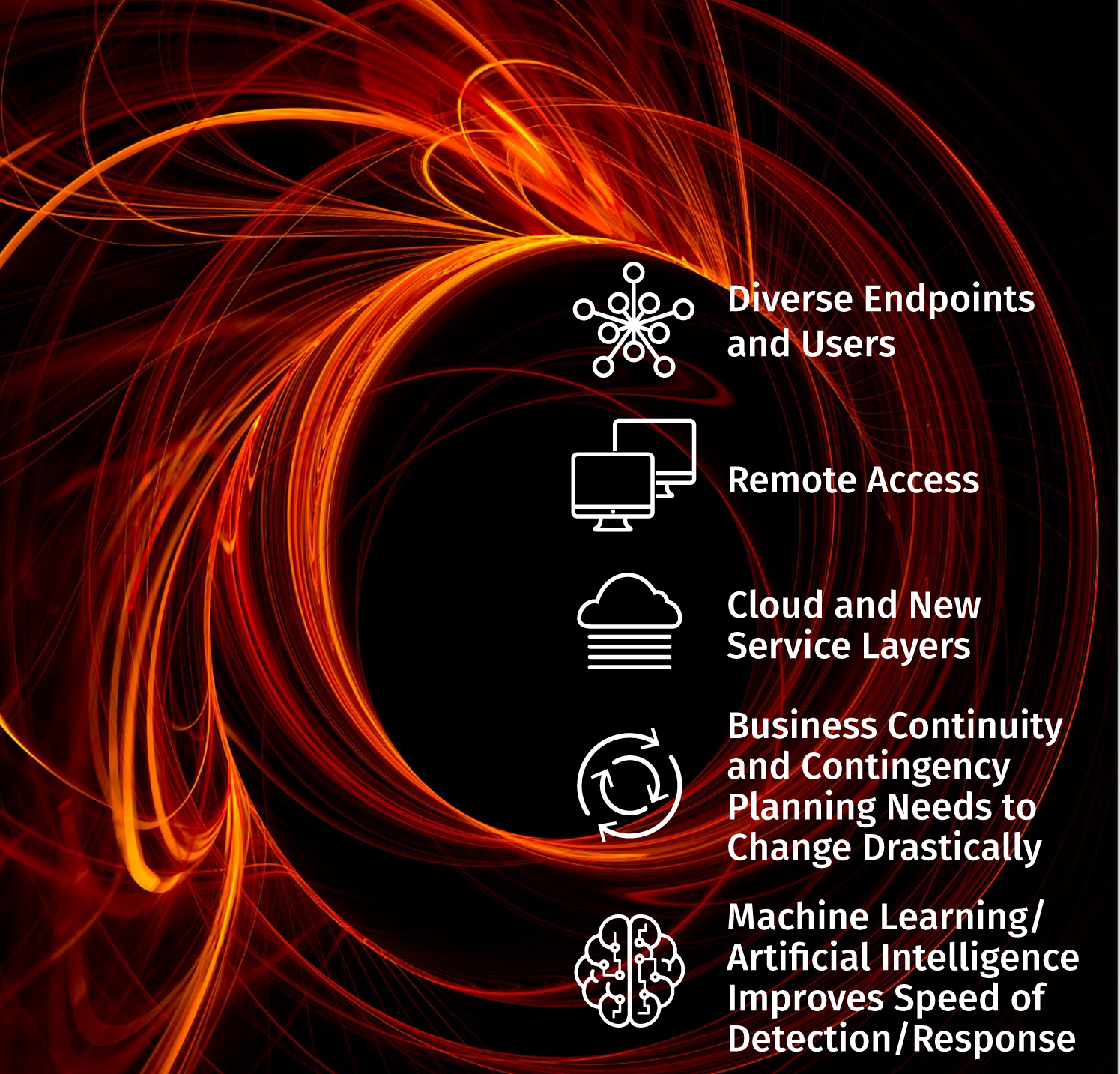

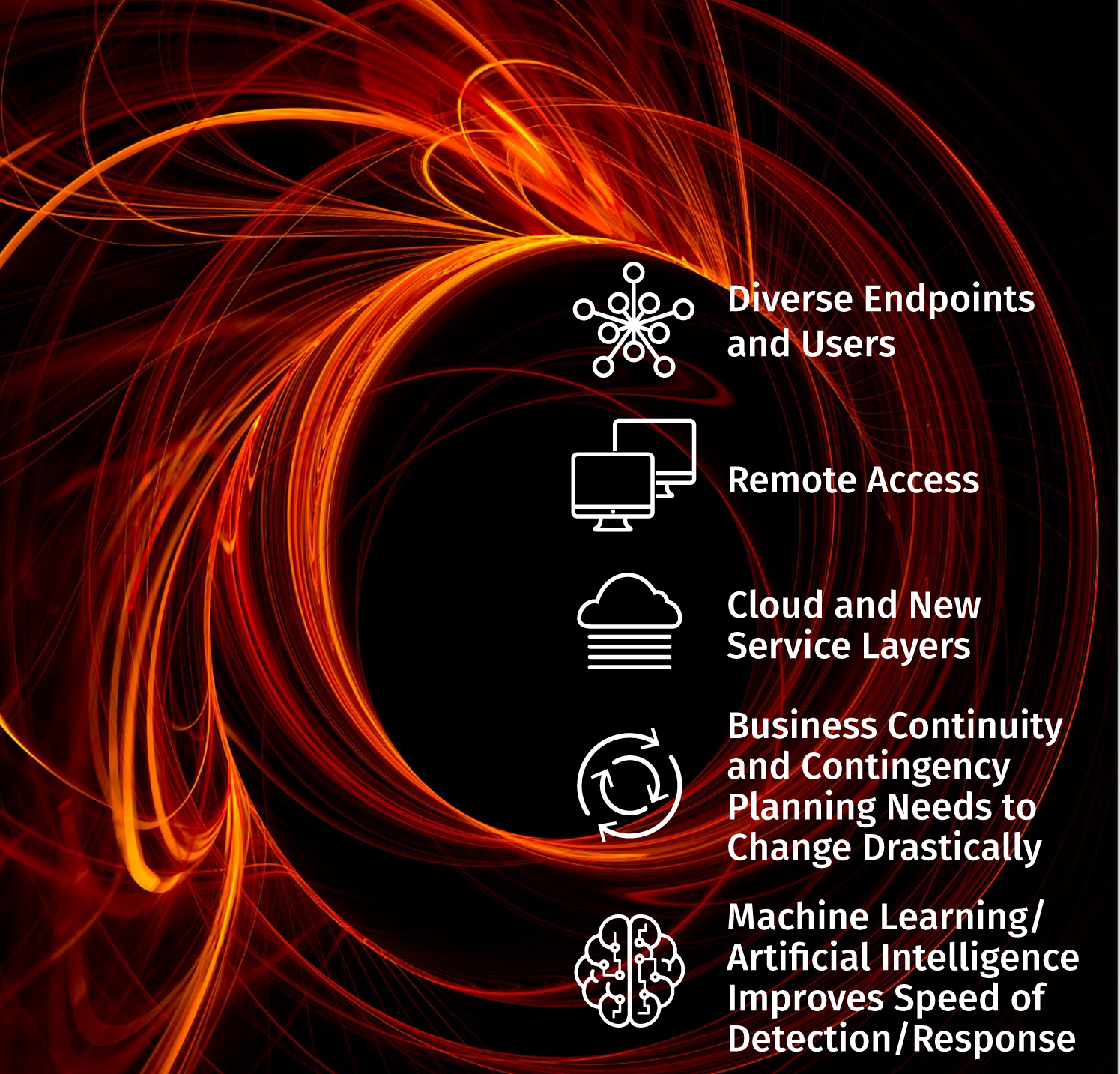

At its heart, zero trust is really a modern take on least privilege access that is dynamically updated and tied to both network and identity-based behaviors and components. For any zero trust project, it’s critical to build a least privilege business case that looks at the various ways a robust zero trust enablement technology can improve access control and organizational security across the board. Let’s take a look at some common business drivers (shown in Figure 3).

Diverse Endpoints and Users

The number and types of endpoints and users/groups functioning within organizations are growing, in some cases rapidly. Especially for large organizations with a massive and diverse set of technologies and user variations in place, choosing a technology that can accommodate all of these could vastly simplify the implementation and maintenance of access control operations for the foreseeable future.

Remote Access

As more organizations shift to remote workforce options, traditional virtual private network (VPN) clients and centralized VPN infrastructure are proving limited in their ability to differentiate use cases and access models. With more capable endpoint protection and simplified endpoint client installation and support, organizations could consolidate remote access strategies to include access controls, device identity and more.

Cloud and New Service Layers

With the drive to hybrid and public cloud deployment models, the need to find technologies that support a wide range of hosting and infrastructure deployment grows rapidly. Today, few zero trust access control platforms have strong support across numerous cloud provider environments, as well as on-premises data centers.

Consolidated integration and deployment support could help shift least privilege strategy to a unified technology solution that works in all environments.

Business Continuity and Contingency Planning Needs to Change Drastically

Many organizations are now realizing that business continuity and contingency planning needs to better embrace the unexpected and unknown scenarios that could easily occur in the future. There is no way to plan for all scenarios, but embracing more flexible approaches to endpoint technology and rapidly changing business use cases could drive access control models toward a ubiquitous zero trust strategy and technology implementation.

Machine Learning/Artificial Intelligence Improves Speed of Detection/Response

In a nutshell, machine learning means training machines to solve problems. This is most often applied to problems that can be “solved” by repeated training and pattern recognition development. Artificial intelligence (AI) and machine learning techniques can help security professionals and technologies recognize patterns in data; this can be extremely useful at scale. For example, collected threat intelligence data provides perspective on attacker sources, indicators of compromise and attack behavioral trends. Threat intelligence data can be aggregated, analyzed at scale using machine learning and processed for likelihood/predictability models. These models are then fed back to zero trust access control policies and platforms to dynamically update detection and response capabilities.
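As a much simpler stand-in for the machine learning described here (a basic statistical baseline, not machine learning), the sketch below flags a value that falls more than three standard deviations from historical observations. The hourly connection counts and the threshold are hypothetical.

```python
from statistics import mean, stdev


def is_anomalous(history: list[float], value: float, threshold: float = 3.0) -> bool:
    """Flag value if it lies more than `threshold` standard deviations from the mean.

    Requires at least two historical observations (stdev needs two points).
    """
    mu, sigma = mean(history), stdev(history)
    if sigma == 0:
        return value != mu          # flat history: any change is unusual
    return abs(value - mu) / sigma > threshold


# Hypothetical hourly connection counts between two workloads (mean 40, stdev 2).
baseline = [40, 42, 38, 41, 39, 40, 43, 37]
print(is_anomalous(baseline, 41))   # False
print(is_anomalous(baseline, 120))  # True
```

Appending values judged normal to `baseline` lets the model adapt over time, a crude version of the dynamic updating the paragraph describes.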

Wrap-Up: Where the Zero Trust Model Is Headed

It’s almost a certainty that this market and the offerings for zero trust technology will continue to evolve. Organizations should take the following steps to implement zero trust.

1. First, consider whether a zero trust technology is really something you need at the moment to provide additional application insight and micro-segmentation capabilities. If you feel these tools can benefit you right now, make sure you discuss the implications with regulators and compliance teams. Also, plan to provide a fairly significant amount of operational support for the first 6 to 12 months of implementation, because the discovery process often requires significant coordination as well as tuning among teams as policies are put into enforcement mode.

2. Second, if you’re just starting to consider the market, review the leading vendors in the space, all of which offer varying capabilities and benefits with different types of limitations and drawbacks, too. Some technologies leverage network platforms (firewalls, for example) as the primary enforcement point for zero trust, whereas others rely more on host-based agents. Still others center almost completely on identity.

3. Lastly, keep in mind that, in many ways, the term zero trust is currently more of a conceptual idea than a true technical control. The idea of least privilege assigned to systems and applications, with a dash of whitelisting and “default deny” thrown in for good measure, gets one somewhat close to what zero trust aspires to be. Over time, zero trust technologies will likely complement many core security technologies rather than replace them entirely.

About the Author

Dave Shackleford, a SANS analyst, senior instructor, course author, GIAC technical director and member of the board of directors for the SANS Technology Institute, is the founder and principal consultant with Voodoo Security. He has consulted with hundreds of organizations in the areas of security, regulatory compliance, and network architecture and engineering. A VMware vExpert, Dave has extensive experience designing and configuring secure virtualized infrastructures. He previously worked as chief security officer for Configuresoft and CTO for the Center for Internet Security. Dave currently helps lead the Atlanta chapter of the Cloud Security Alliance.